Efficient Deep Learning: A Practical Guide - Part 0

The Need for Smaller Networks

Introduction to the Series

Over the past decade, deep learning has driven breakthroughs in fields such as computer vision, natural language processing, and reinforcement learning. However, these advancements have come at a cost: neural networks are becoming increasingly large and computationally expensive. While larger models often deliver higher accuracy, they also present significant challenges in terms of storage, inference speed, and energy consumption.

To address these challenges, model compression techniques have emerged as a powerful solution. These methods reduce the size and computational cost of neural networks without significantly sacrificing performance. Whether you're optimizing models for mobile deployment, real-time inference, or cloud efficiency, compression techniques are essential for building faster, lighter, and more scalable AI systems.

This series of articles will explore various techniques to optimize neural networks, providing both theoretical insights and hands-on examples using FasterAI, a framework designed to make neural network compression easy and efficient.

In this introductory article, we will explore:

- How deep learning models have grown in size over time

- The key challenges posed by large models

- Why model compression is essential for the future of AI

The Explosive Growth of Model Sizes

Deep learning models have grown by several orders of magnitude in under a decade, driven by the pursuit of higher accuracy, broader generalization, and emergent capabilities. ResNet-50 (2015) had about 25 million parameters; by 2018, BERT had passed 100 million (110 million for the base model, 340 million for the large one); and by 2020, GPT-3 had reached 175 billion. Today, the largest models are reported to reach into the trillions of parameters, requiring unprecedented computational resources.

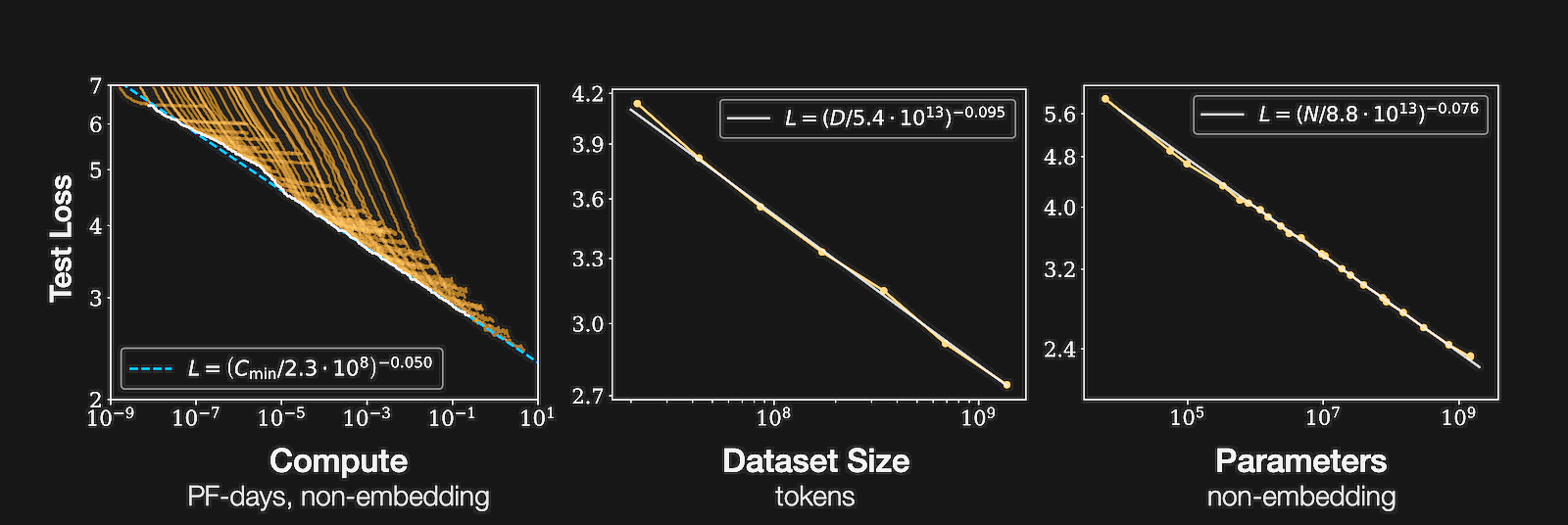

This trend follows what researchers call scaling laws: empirical results showing that as model size, dataset size, and compute increase together, the loss falls in a predictable way, approximately as a power law. Studies, particularly those from OpenAI and DeepMind, have shown that larger models not only achieve lower error rates but also tend to display more sophisticated reasoning and generalization abilities. As a result, the industry has been engaged in a continuous arms race, scaling models to ever larger sizes to unlock new capabilities.

Yet, despite these remarkable gains, scaling comes with significant challenges. Compute and memory requirements grow at least in proportion to parameter count, and training cost grows with both model size and dataset size, making large models slow, expensive, and energy-intensive to train and deploy. The increasing size of AI systems is also raising concerns about accessibility, as only a handful of organizations with vast computing infrastructure can afford to build and use such models. Moreover, while scaling has proven effective so far, recent research suggests that returns may be diminishing, which strengthens the case for more efficient architectures and optimization techniques.
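To make the "predictable" improvement and the diminishing returns concrete, here is a minimal sketch that models loss as a power law in parameter count. The exponent `ALPHA = 0.076` is an assumed value, of the order reported for language-model parameter scaling, and is not a figure from this article; the 25-million-parameter reference point is simply the ResNet-50 size quoted above.

```python
# Power-law scaling sketch: loss is proportional to N ** -ALPHA.
# ALPHA is an assumed, illustrative exponent (not taken from this article).
ALPHA = 0.076
REFERENCE_PARAMS = 25e6  # reference size; loss is reported relative to this model


def relative_loss(num_params: float) -> float:
    """Loss of a model with num_params parameters, relative to the reference model."""
    return (num_params / REFERENCE_PARAMS) ** -ALPHA


def loss_ratio(scale_factor: float) -> float:
    """Factor by which the loss is multiplied when the model grows by scale_factor."""
    return scale_factor ** -ALPHA


for n in (25e6, 110e6, 175e9, 1e12):
    print(f"{n:10.2e} parameters -> relative loss {relative_loss(n):.3f}")

# Every 10x increase in size buys the same *relative* reduction in loss,
# so the absolute gain per added parameter keeps shrinking.
print(f"10x more parameters multiplies the loss by {loss_ratio(10):.3f}")
```

Under this assumption, each tenfold increase in parameters multiplies the loss by the same constant factor, which is why the cost of every further improvement keeps rising.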

The Challenges of Large Models

1. Slow and Expensive

As neural networks grow larger and more complex, their computational requirements grow with them, posing significant challenges for real-time applications and for resource-constrained hardware such as smartphones, edge devices, and embedded systems. Large models demand substantial hardware resources to perform inference at acceptable speed.

For example, GPT-3 has 175 billion parameters. Stored at 16-bit precision, its weights alone occupy about 350 GB, far more than a single GPU typically holds, so generating a response within a reasonable timeframe requires several high-end GPUs working together. This reliance on specialized hardware not only limits accessibility but also makes deployment on devices with lower computational capacity, such as mobile phones or IoT devices, virtually impossible. Running such models on standard CPUs is slow at best and often impractical for real-time scenarios.

2. High Energy Consumption

As deep learning models continue to grow in size, their energy consumption has surged dramatically, raising concerns about operational costs and environmental impact. Training and deploying these models require massive computational resources, which translates into significant electricity usage and carbon emissions.

For instance, OpenAI has not disclosed official figures, but third-party estimates suggest that a single training run at the scale of GPT-4 consumes as much electricity as thousands of homes use in an entire year. This power demand is not only costly but also hard to sustain in the long run. Beyond training, deploying AI models at scale requires specialized data centers equipped with high-performance GPUs and TPUs, further increasing energy consumption.

3. Memory and Storage Limitations

The rapid growth of neural network architectures has led to models with billions or even trillions of parameters, posing significant memory and storage challenges. These large-scale models demand specialized hardware to run efficiently and require vast storage capacity, making them difficult to deploy in real-world scenarios.

One major issue is that such massive models often exceed the memory capacity of typical consumer devices, such as smartphones, edge devices, and embedded systems. Without substantial memory optimization, running these models on resource-constrained hardware is virtually impossible.

Additionally, serving these models requires high-end GPUs with large amounts of VRAM, which restricts their use to organizations with significant computing resources. Storage demands grow too: parameter counts for models like GPT-4 are not public, but a model with one trillion parameters stored at 16-bit precision would occupy about 2 TB, which makes distributing and updating such models increasingly challenging.
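The arithmetic behind these limits is simple: weight memory is the parameter count times the bytes used per parameter. The sketch below applies it to the models mentioned earlier. The 110-million figure is BERT-base, the 80 GB GPU capacity is an assumption chosen for illustration, and the helper names are hypothetical. Activations, caches, and optimizer state are ignored, so real requirements are higher.

```python
import math

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# ResNet-50 and GPT-3 sizes as quoted in the article; 110M is BERT-base.
MODELS = {"ResNet-50": 25e6, "BERT-base": 110e6, "GPT-3": 175e9}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus(num_params: float, dtype: str, gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on the GPUs needed just to hold the weights.

    The 80 GB default is an assumed per-GPU capacity, not a figure from the article.
    """
    return math.ceil(weight_memory_gb(num_params, dtype) / gpu_memory_gb)


for name, n in MODELS.items():
    sizes = ", ".join(f"{d}: {weight_memory_gb(n, d):,.2f} GB" for d in BYTES_PER_PARAM)
    print(f"{name:<10} {sizes}")

print("GPT-3 in fp16 needs at least", min_gpus(175e9, "fp16"), "GPUs of 80 GB")
```

Halving the bytes per parameter halves the footprint, which is the basic reason lower-precision formats matter so much for deployment.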

4. Growing Demand for AI in Embedded and Real-World Applications

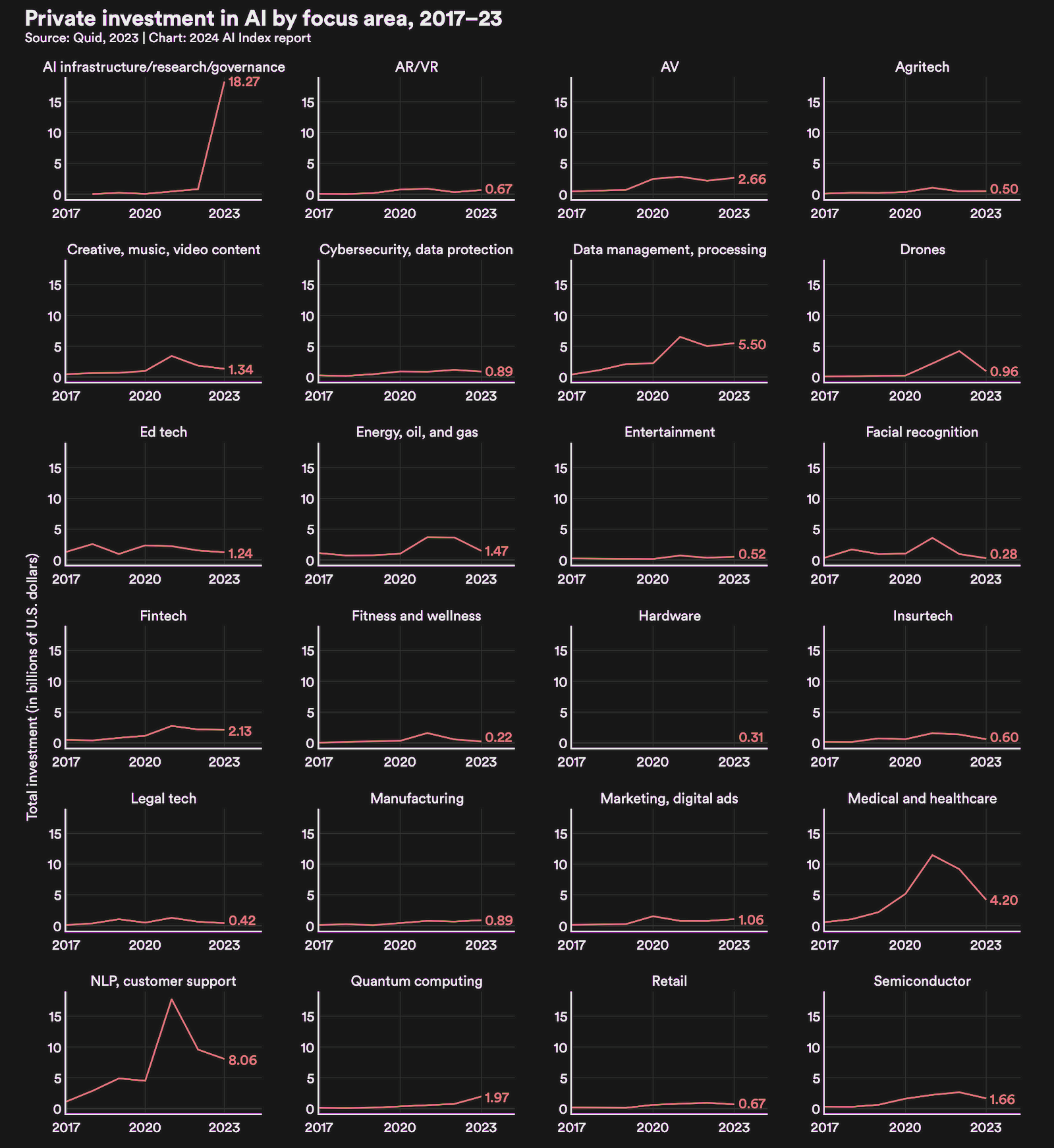

As AI technology advances, investment is increasingly shifting toward real-world applications that require efficient, on-device processing. Many modern AI-powered industries need models that can run locally on embedded systems, rather than relying on cloud computing.

This shift is driven by several critical factors:

- The need for real-time inference with minimal latency.

- Constraints in power consumption and compute resources on edge devices.

- Privacy concerns, where regulations like GDPR and HIPAA restrict sending sensitive data to the cloud.

Despite these pressing needs, most state-of-the-art models remain too large and computationally intensive to be deployed efficiently on embedded hardware.

Why Model Compression is the Future

Given these challenges, model compression has become an essential area of research and innovation. The goal is to reduce model size and computational requirements while maintaining performance.

Some of the key techniques include:

- Knowledge Distillation – A smaller student model learns to mimic the predictions of a larger teacher model, preserving much of its performance with fewer parameters.

- Quantization – Reduces the precision of numerical representations (e.g., from 32-bit floating point to 8-bit integer), cutting memory usage by about 4x in that case and speeding up inference, often with little accuracy loss.

- Sparsification – Converts dense neural networks into sparse ones, where most weights are set to zero and only a fraction remain active, enabling faster computation on hardware and runtimes that can skip the zeros.

- Pruning – Identifies and removes redundant neurons and connections, reducing model size without drastically affecting performance.

- Low-Rank Factorization – Decomposes large weight matrices into products of smaller ones, reducing parameters and computation at the cost of an approximation error that is small when the original matrix is close to low rank.
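Three of the techniques above act directly on a weight matrix and can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not how any particular library implements them: the function names are hypothetical, quantization is symmetric with a single scale per tensor, pruning is unstructured and magnitude-based, and the weight matrix is synthetic (low rank plus noise) so that the factorization has something to find.

```python
import numpy as np


def quantize_int8(w):
    """Symmetric per-tensor quantization: floats -> int8 values plus one float scale."""
    scale = float(np.abs(w).max()) / 127.0  # assumes w is not all zeros
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate float32 weights."""
    return q.astype(np.float32) * scale


def magnitude_prune(w, sparsity):
    """Set the `sparsity` fraction of weights with the smallest magnitude to zero."""
    k = int(sparsity * w.size)
    flat = w.flatten()  # flatten() returns a copy, so w itself is untouched
    if k > 0:
        smallest = np.argpartition(np.abs(flat), k - 1)[:k]  # indices of the k smallest
        flat[smallest] = 0
    return flat.reshape(w.shape)


def low_rank_factorize(w, rank):
    """Truncated SVD: approximate w (m x n) by a @ b, with a (m x rank) and b (rank x n)."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    return u[:, :rank] * s[:rank], vt[:rank, :]


# Synthetic weights: a rank-32 matrix plus small noise (an assumption for the demo).
rng = np.random.default_rng(0)
w = rng.standard_normal((256, 32)) @ rng.standard_normal((32, 512))
w = (w + 0.01 * rng.standard_normal((256, 512))).astype(np.float32)

q, scale = quantize_int8(w)
print("bytes fp32 / int8:", w.nbytes, "/", q.nbytes)
print("max quantization error:", float(np.abs(w - dequantize(q, scale)).max()))

pruned = magnitude_prune(w, sparsity=0.5)
print("fraction of zero weights:", 1 - np.count_nonzero(pruned) / pruned.size)

a, b = low_rank_factorize(w, rank=32)
print("parameters dense / factorized:", w.size, "/", a.size + b.size)
print("relative error:", float(np.linalg.norm(w - a @ b) / np.linalg.norm(w)))
```

Note that the zeros produced by pruning only save memory or time when the storage format or runtime exploits them, and that a weight matrix without low-rank structure would show a much larger factorization error than this synthetic one.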

These methods allow us to create smaller, faster, and more efficient AI models that are suitable for real-world deployment.
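Knowledge distillation differs from the other techniques listed above in that it changes how the small model is trained rather than modifying existing weights. A minimal sketch of one commonly used formulation follows: a weighted sum of the usual cross-entropy on the true label and a temperature-softened KL divergence between teacher and student predictions. The function names, the `temperature` and `alpha` defaults, and the example logits are assumptions for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=2.0, alpha=0.5):
    """alpha * CE(student, label) + (1 - alpha) * T^2 * KL(teacher_T || student_T)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Soft-target term: how far the student's softened predictions are from the teacher's.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Hard-target term: ordinary cross-entropy against the true label (temperature 1).
    hard = -math.log(softmax(student_logits)[label])
    # The T^2 factor keeps the soft-term gradients on a scale comparable to the hard term.
    return alpha * hard + (1 - alpha) * temperature ** 2 * kl


teacher = [4.0, 1.0, 0.5]        # made-up logits for a 3-class example
good_student = [3.5, 1.2, 0.4]   # close to the teacher
poor_student = [0.5, 2.0, 1.0]   # disagrees with the teacher

print("loss, good student:", distillation_loss(good_student, teacher, label=0))
print("loss, poor student:", distillation_loss(poor_student, teacher, label=0))
```

In a real training loop this loss would be computed per batch with an autograd framework; the plain-Python version here only shows what is being minimized.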

While scaling laws have driven model growth, the future of AI depends on making models not just bigger, but smarter and more efficient. Model compression techniques will be key to ensuring AI remains accessible, cost-effective, and deployable in real-world applications.

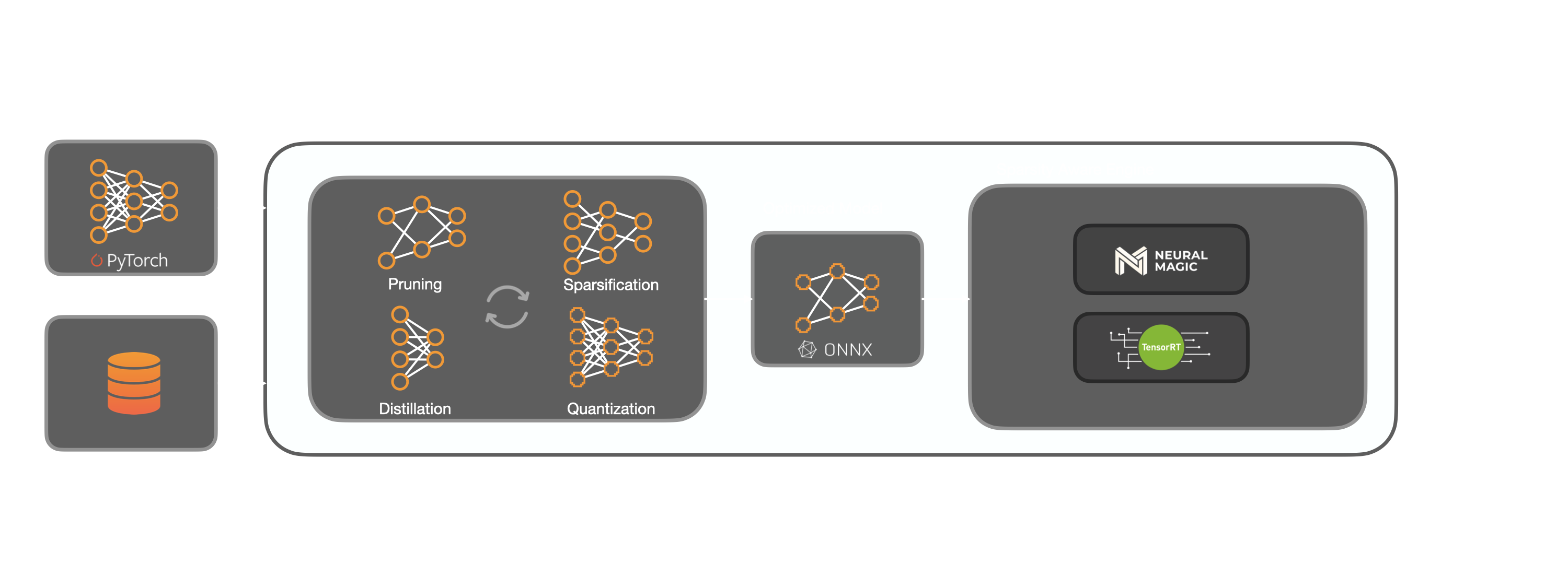

How FasterAI Helps Drive Model Compression

While model compression techniques have been well studied, applying them effectively in real-world projects remains a challenge. Many existing solutions require deep technical expertise, manual tuning, and fragmented tooling, making it difficult for researchers and developers to optimize models efficiently. This is where FasterAI comes in.

FasterAI is designed to make model compression techniques more accessible, automated, and practical for deployment. It combines powerful open-source tools with a streamlined workflow, allowing developers and researchers to easily apply state-of-the-art compression methods.

With FasterAI, you can:

- Easily implement knowledge distillation, quantization, and pruning.

- Benchmark compressed models against standard baselines.

- Optimize inference performance while maintaining accuracy.

- Deploy models with optimized inference runtimes such as DeepSparse and TensorRT.

Conclusion

The rapid expansion of neural network sizes has created major bottlenecks in AI deployment. As AI adoption grows, model compression techniques will be critical for making deep learning more efficient and accessible.

This article lays the foundation for our series on neural network optimization. In the next part, we will explore Knowledge Distillation, one of the most effective methods for reducing model size while maintaining high performance.

Join us on Discord to stay tuned! 🚀